Guardrails vs. Decision Plane

Memrail Team • January 13, 2026

Guardrails Are Not Enough

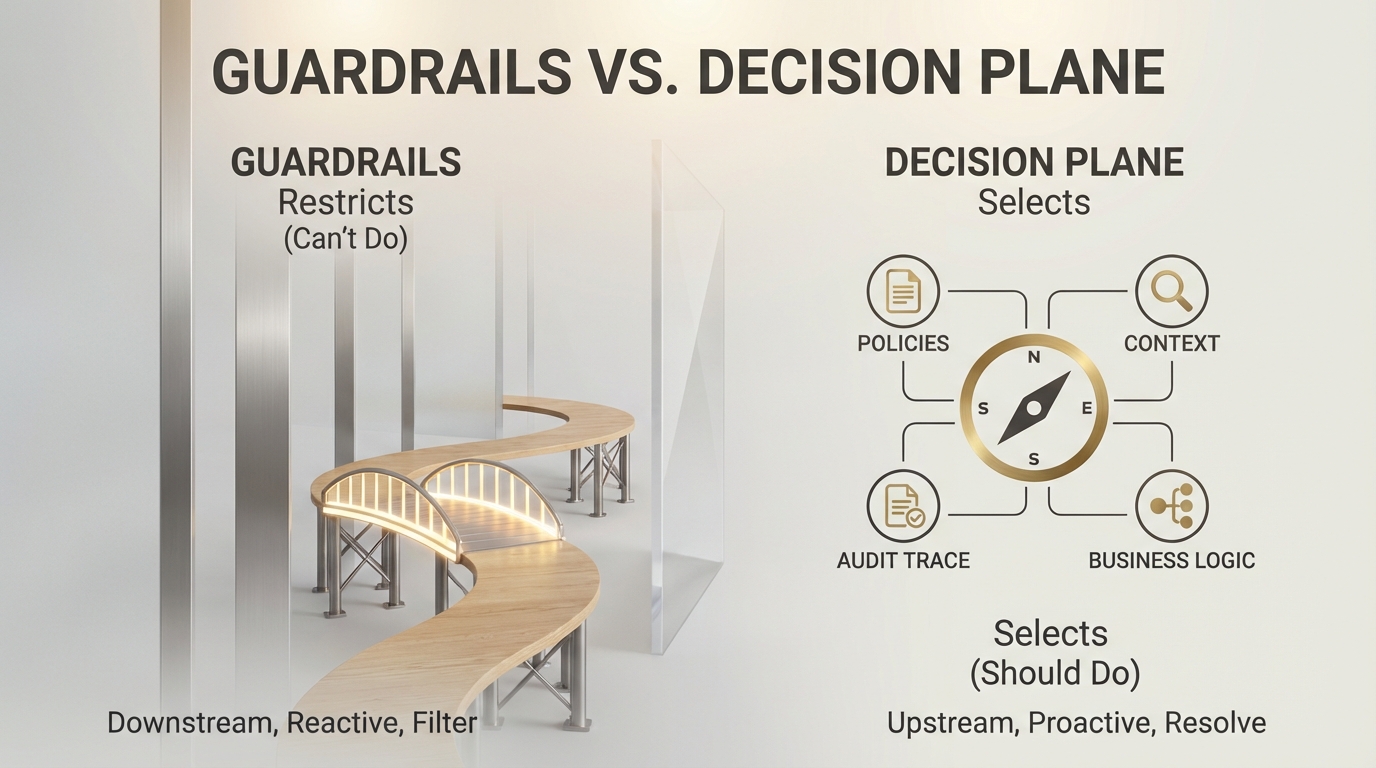

Guardrails are about what you can't do. Decision Planes are about what you should do.

One restricts. The other selects.

This distinction matters more than it might seem. The AI industry has settled on guardrails, system prompts, and ad hoc glue code as the de facto answer to governing AI behavior. But this patchwork solves only half the problem, and as AI systems evolve from chatbots to agents, it's increasingly the wrong half.

The Guardrails Mental Model

Guardrails emerged from a simple need: LLMs say things they shouldn't. They hallucinate, leak PII, generate toxic content, or confidently provide dangerous instructions. The solution was to add a filter, something that inspects outputs and blocks the bad ones.

This model treats governance as a downstream concern. The AI does its thing, and then a checkpoint asks: was that okay?
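Here is a minimal Python sketch of that downstream pattern. `generate` is a hypothetical stub standing in for a model call. The SSN-shaped regex is a toy stand-in for a real PII detector. The ordering is what matters: the model produces content first, and the check runs afterwards.

```python
import re

def generate(prompt: str) -> str:
    # Hypothetical stand-in for an LLM call that leaks PII.
    return f"Sure. The customer's SSN is 123-45-6789. ({prompt})"

# Toy PII detector: matches US SSN-shaped strings only.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

def guardrail(output: str) -> bool:
    """Return True if the output is acceptable, False if it should be blocked."""
    return SSN_PATTERN.search(output) is None

def answer(prompt: str) -> str:
    output = generate(prompt)      # the model has already produced content
    if not guardrail(output):      # the checkpoint asks: was that okay?
        return "[blocked by guardrail]"
    return output

print(answer("look up account 42"))  # prints "[blocked by guardrail]"
```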

It's reactive by design. And for content safety, it works reasonably well. But agents break this model.

What Guardrails Can't Do

Consider an AI agent that manages customer accounts. It can issue refunds, escalate tickets, modify subscriptions, and access purchase history. The interesting questions aren't about output toxicity. They're about decisions:

Should this agent issue a refund right now, for this customer, given their history, the amount, the current policy, and the fact that they've already received two refunds this quarter?

Guardrails have no answer here. There's nothing to filter. The agent needs to decide, and that decision involves resolving multiple constraints, applying business logic, respecting permissions, and producing an auditable trace of why it chose what it chose.
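To make the contrast concrete, here is the refund question written as decision logic instead of as a filter. This is a minimal Python sketch. The field names, the two-refunds-per-quarter limit, and the $100 auto-approve threshold are hypothetical policy values chosen for illustration. No output exists yet for a filter to inspect. The result of this code *is* the decision, and it comes with the reasons behind it.

```python
from dataclasses import dataclass, field

@dataclass
class RefundRequest:
    customer_id: str
    amount: float
    refunds_this_quarter: int
    account_in_good_standing: bool

@dataclass
class Decision:
    action: str                                   # "issue_refund", "escalate", or "deny"
    trace: list[str] = field(default_factory=list)

# Hypothetical policy values, for illustration only.
MAX_REFUNDS_PER_QUARTER = 2
AUTO_APPROVE_LIMIT = 100.0

def decide_refund(req: RefundRequest) -> Decision:
    trace: list[str] = []
    if not req.account_in_good_standing:
        trace.append("account not in good standing -> deny")
        return Decision("deny", trace)
    if req.refunds_this_quarter >= MAX_REFUNDS_PER_QUARTER:
        trace.append(f"{req.refunds_this_quarter} refunds this quarter "
                     f">= limit {MAX_REFUNDS_PER_QUARTER} -> escalate")
        return Decision("escalate", trace)
    if req.amount > AUTO_APPROVE_LIMIT:
        trace.append(f"amount {req.amount} > auto-approve limit {AUTO_APPROVE_LIMIT} -> escalate")
        return Decision("escalate", trace)
    trace.append("all checks passed -> issue_refund")
    return Decision("issue_refund", trace)

d = decide_refund(RefundRequest("c-17", 40.0, refunds_this_quarter=2, account_in_good_standing=True))
print(d.action)  # escalate
```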

This isn't a content safety problem. It's a governance problem. And it lives upstream of generation, not downstream.

The Decision Plane

A Decision Plane is an architectural layer that owns decision logic. It sits between intent and execution, determining what action should be taken given the current context, constraints, and policies.

Where guardrails ask "is this output acceptable?", a Decision Plane asks "what should happen?"

A Decision Plane doesn't filter. It resolves. It takes candidate actions, applies policies, enforces constraints, handles precedence when rules conflict, and produces a decision along with a trace explaining how it got there.
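The resolve step can be sketched in a few lines of Python. This is a hypothetical model and not Memrail's implementation. It shows one simple precedence scheme among many:

- Each rule matches a candidate action in context and votes `allow` or `deny` with a priority.
- The highest-priority matching rule wins, and `deny` beats `allow` on a tie.
- An action that no rule matches is denied by default.

Every evaluation is appended to the trace.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Context = dict[str, object]  # hypothetical: plain facts about the current request

@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[str, Context], bool]  # does this rule speak to (action, context)?
    effect: str                              # "allow" or "deny"
    priority: int                            # higher wins when rules conflict

def resolve(candidates: list[str], rules: list[Rule],
            ctx: Context) -> tuple[Optional[str], list[str]]:
    trace: list[str] = []
    for action in candidates:                # candidates arrive in preference order
        matched = [r for r in rules if r.applies(action, ctx)]
        if not matched:
            trace.append(f"{action}: no rule matched -> default deny")
            continue
        top = max(r.priority for r in matched)
        winners = [r for r in matched if r.priority == top]
        # Precedence: highest priority first; deny overrides allow on ties.
        effect = "deny" if any(r.effect == "deny" for r in winners) else "allow"
        names = ", ".join(r.name for r in winners)
        trace.append(f"{action}: {effect} (priority {top}: {names})")
        if effect == "allow":
            return action, trace
    return None, trace

rules = [
    Rule("support agents may refund", lambda a, c: a == "issue_refund", "allow", 1),
    Rule("refund freeze during audit",
         lambda a, c: a == "issue_refund" and c.get("audit_freeze") is True, "deny", 10),
    Rule("escalation always allowed", lambda a, c: a == "escalate", "allow", 1),
]
decision, trace = resolve(["issue_refund", "escalate"], rules, {"audit_freeze": True})
print(decision)  # escalate
```

The refund is the agent's first choice. The higher-priority freeze overrides it, so the plane falls through to escalation. The trace records both steps.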

This makes decision logic a first-class artifact: something that can be inspected, tested, versioned, and modified without touching application code. Policies are decoupled from the code that executes them. Behavior becomes deterministic: the same context under the same policies yields the same decision. Decisions become auditable.
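One way to read "first-class artifact" is that the policy lives in a document the application loads, not in `if` statements scattered through the code. The sketch below assumes a hypothetical JSON policy format with a `version` field. Revising the document changes behavior with no code change, and each version can be pinned by its own tests.

```python
import json

# Hypothetical policy document; in practice it might live in a repo or a policy store.
POLICY_V1 = """
{
  "version": "2026-01-01",
  "max_refunds_per_quarter": 2,
  "auto_approve_limit": 100.0
}
"""

REQUIRED_KEYS = ("version", "max_refunds_per_quarter", "auto_approve_limit")

def load_policy(text: str) -> dict:
    policy = json.loads(text)
    # Fail fast on a malformed policy instead of at decision time.
    missing = [k for k in REQUIRED_KEYS if k not in policy]
    if missing:
        raise ValueError(f"policy missing keys: {missing}")
    return policy

def refund_action(policy: dict, amount: float, refunds_this_quarter: int) -> str:
    # Application code reads thresholds from the policy and hardcodes none.
    if refunds_this_quarter >= policy["max_refunds_per_quarter"]:
        return "escalate"
    if amount > policy["auto_approve_limit"]:
        return "escalate"
    return "issue_refund"

v1 = load_policy(POLICY_V1)
# A revised policy document: same code, different behavior, new version string.
v2 = load_policy(json.dumps({**v1, "version": "2026-02-01", "auto_approve_limit": 250.0}))

# Policy tests pin the expected behavior of each version.
assert refund_action(v1, amount=180.0, refunds_this_quarter=0) == "escalate"
assert refund_action(v2, amount=180.0, refunds_this_quarter=0) == "issue_refund"
```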

Different Positions in the Stack

The easiest way to understand the difference is by where each sits:

Guardrails are positioned after generation. Content is produced, then checked. If it fails, it's blocked or regenerated. The system has already decided what to do. Guardrails just validate the output.

A Decision Plane is positioned before execution. It determines what action to take before anything happens. The decision itself is the output, not a check on some other output.
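The difference in position shows up as a difference in ordering. In this hypothetical Python sketch, `execute` records side effects in a list. The policy check and the $500 limit are illustrative only, and the check is identical in both pipelines. Only where it runs changes. Placed after the action, the check finds the side effect already done. Placed before it, the check decides whether anything happens at all.

```python
executed: list[str] = []   # stands in for real side effects (API calls, payments)
LIMIT = 500.0              # hypothetical refund limit

def execute(action: str, amount: float) -> None:
    executed.append(f"{action}:{amount}")

def violates_policy(action: str, amount: float) -> bool:
    return action == "refund" and amount > LIMIT

def guardrail_pipeline(action: str, amount: float) -> str:
    execute(action, amount)                 # 1. act
    if violates_policy(action, amount):     # 2. check after the fact
        return "blocked (but already executed)"
    return "ok"

def decision_pipeline(action: str, amount: float) -> str:
    if violates_policy(action, amount):     # 1. decide before anything happens
        return "denied (nothing executed)"
    execute(action, amount)                 # 2. act only if allowed
    return "ok"

executed.clear()
print(guardrail_pipeline("refund", 900.0), executed)  # blocked (but already executed) ['refund:900.0']
executed.clear()
print(decision_pipeline("refund", 900.0), executed)   # denied (nothing executed) []
```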

This means guardrails and Decision Planes aren't competing concepts. They're complementary layers. In a well-architected system, guardrails become one type of constraint that a Decision Plane enforces, alongside permissions, business rules, rate limits, compliance requirements, and everything else that governs behavior.
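Here is a sketch of that composition, with every name in it hypothetical. All constraints share one interface, so a content guardrail is just one more entry in the list, next to a permission check and a rate limit. It still inspects text, but the text is the message the agent *proposes* to send, and the check runs before anything is sent. The sketch collects every violation, so the result lists all the reasons and not only the first.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Proposal:
    actor_role: str
    action: str
    message: str              # text the agent intends to send alongside the action
    actions_last_hour: int

# A constraint returns None when satisfied, or a human-readable violation.
Constraint = Callable[[Proposal], Optional[str]]

ALLOWED = {"support": {"reply", "escalate", "refund"}, "viewer": {"reply"}}  # hypothetical roles

def permission(p: Proposal) -> Optional[str]:
    if p.action in ALLOWED.get(p.actor_role, set()):
        return None
    return f"role {p.actor_role!r} may not {p.action}"

def rate_limit(p: Proposal) -> Optional[str]:
    return None if p.actions_last_hour < 20 else "rate limit: 20 actions/hour exceeded"

def content_guardrail(p: Proposal) -> Optional[str]:
    # A familiar (toy) output guardrail, now one constraint among several.
    for word in ("password", "ssn"):
        if word in p.message.lower():
            return f"message mentions {word!r}"
    return None

CONSTRAINTS: list[Constraint] = [permission, rate_limit, content_guardrail]

def decide(p: Proposal) -> tuple[bool, list[str]]:
    # Evaluate every constraint so the result lists all reasons, not just the first.
    violations = [v for c in CONSTRAINTS if (v := c(p)) is not None]
    return (len(violations) == 0, violations)

ok, why = decide(Proposal("viewer", "refund", "Here is your password reset", 3))
print(ok, why)
```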

Why This Matters Now

When AI systems only generated text, guardrails were a reasonable answer. The failure mode was bad content, and the solution was content filtering.

But agents change the equation. An agent that can execute code, call APIs, move money, or modify data has failure modes that guardrails can't catch. The risk isn't just saying something wrong. It's doing something wrong. And by the time you're filtering outputs, the action may have already happened.

Agents need a layer that governs decisions before execution. They need clear logic about what they should do, applied consistently, with an audit trail. They need policies that can evolve without redeploying code. They need a way to explain why they did what they did.

They need a Decision Plane.
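Explaining why an agent did what it did comes down largely to recording enough at decision time. Here is a minimal sketch, assuming a hypothetical append-only JSON Lines log. Each record captures the inputs, the policy version in force, the outcome, and the trace, so a later question about a specific action becomes a lookup. The helper names `record_decision` and `explain` are illustrative, not an existing API.

```python
import io
import json
import uuid
from datetime import datetime, timezone

def record_decision(log, *, inputs: dict, policy_version: str,
                    outcome: str, trace: list[str]) -> str:
    """Append one decision record as a JSON line to a text stream and return its id."""
    decision_id = str(uuid.uuid4())
    log.write(json.dumps({
        "id": decision_id,
        "at": datetime.now(timezone.utc).isoformat(),
        "policy_version": policy_version,
        "inputs": inputs,
        "outcome": outcome,
        "trace": trace,
    }) + "\n")
    return decision_id

def explain(log_text: str, decision_id: str) -> list[str]:
    """Look up why a past decision came out the way it did."""
    for line in log_text.splitlines():
        rec = json.loads(line)
        if rec["id"] == decision_id:
            return rec["trace"]
    raise KeyError(decision_id)

# An in-memory buffer stands in for durable, append-only storage.
log = io.StringIO()
did = record_decision(
    log,
    inputs={"customer_id": "c-17", "amount": 40.0, "refunds_this_quarter": 2},
    policy_version="2026-01-01",
    outcome="escalate",
    trace=["2 refunds this quarter >= limit 2 -> escalate"],
)
print(explain(log.getvalue(), did))  # ['2 refunds this quarter >= limit 2 -> escalate']
```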

The Uncomfortable Implication

If you accept this framing, most AI systems today are undergoverned. They have guardrails, maybe good ones, but they lack a coherent layer for decision governance. Decision logic is embedded: scattered across prompts, hardcoded in orchestration, buried in application code. When something goes wrong, figuring out why is forensic work.

This is fine for demos and prototypes. It's not fine for systems that do real things in the real world.

The companies that figure out decision governance will be able to deploy agents with confidence. The ones that don't will keep bolting on guardrails and hoping for the best.

Guardrails were a good start. But they were never the whole answer.